You can’t say you have all the answers if you haven’t asked all the questions. So, at a conference on the medical and ecological consequences of the Fukushima nuclear disaster, held to commemorate the second anniversary of the earthquake and tsunami that struck northern Japan, there were lots of questions. Questions about what actually happened at Fukushima Daiichi in the first days after the quake, and how that differed from the official report; questions about what radionuclides were in the fallout and runoff, at what concentrations, and how far they have spread; and questions about what near- and long-term effects this disaster will have on people and the planet, and how we will measure and recognize those effects.

A distinguished list of epidemiologists, oncologists, nuclear engineers, former government officials, Fukushima survivors, anti-nuclear activists and public health advocates gathered at the invitation of The Helen Caldicott Foundation and Physicians for Social Responsibility to, if not answer all these questions, at least make sure they got asked. Over two long days, it was clear there is much still to be learned, but it was equally clear that we already know that the downsides of nuclear power are real, and what’s more, the risks are unnecessary. Relying on this dirty, dangerous and expensive technology is not mandatory–it’s a choice. And when cleaner, safer, and more affordable options are available, the one answer we already have is that nuclear is a choice we should stop making and a risk we should stop taking.

“No one died from the accident at Fukushima.” This refrain, as familiar as multiplication tables and sounding about as rote when recited by acolytes of atomic power, is a close mirror to versions used to downplay earlier nuclear disasters, like Chernobyl and Three Mile Island (as well as many less infamous events), and is somehow meant to be the discussion-ender, the very bottom-line of the bottom-line analysis that is used to grade global energy options. “No one died” equals “safe” or, at least, “safer.” Q.E.D.

But beyond the intentional blurring of the differences between an “accident” and the probable results of technical constraints and willful negligence, the argument (if this saw can be called such) cynically exploits the space between solid science and the simple sound bite.

“Do not confuse narrowly constructed research hypotheses with discussions of policy,” warned Steve Wing, Associate Professor of Epidemiology at the University of North Carolina’s Gillings School of Public Health. Good research is an exploration of good data, but, Wing contrasted, “Energy generation is a public decision made by politicians.”

Surprisingly unsurprising

A public decision, but not necessarily one made in the public interest. Energy policy could be informed by health and environmental studies, such as the ones discussed at the Fukushima symposium, but it is more likely the research is spun or ignored once policy is actually drafted by the politicians who, as Wing noted, often sport ties to the nuclear industry.

The link between politicians and the nuclear industry they are supposed to regulate came into clear focus in the wake of the March 11, 2011 Tohoku earthquake and tsunami–in Japan and the United States.

The boiling water reactors (BWRs) that failed so catastrophically at Fukushima Daiichi were designed and sold by General Electric in the 1960s; the general contractor on the project was Ebasco, a US engineering company that, back then, was still tied to GE. General Electric had bet heavily on nuclear and worked hand-in-hand with the US Atomic Energy Commission (AEC–the precursor to the NRC, the Nuclear Regulatory Commission) to promote civilian nuclear plants at home and abroad. According to nuclear engineer Arnie Gundersen, GE told US regulators in 1965 that without quick approval of multiple BWR projects, the giant energy conglomerate would go out of business.

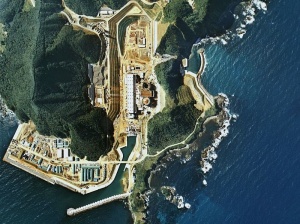

It was under the guidance of GE and Ebasco that the rocky bluffs where Daiichi would be built were actually trimmed by 10 meters to bring the power plant closer to the sea, the water source for the reactors’ cooling systems–but it was under Japanese government supervision that serious and repeated warnings about the environmental and technological threats to Fukushima were ignored for another generation.

Failures at Daiichi were completely predictable, observed David Lochbaum, the director of the Nuclear Safety Project at the Union of Concerned Scientists, and numerous upgrades were recommended over the years by scientists and engineers. “The only surprising thing about Fukushima,” said Lochbaum, “is that no steps were taken.”

The surprise, it seems, should cross the Pacific. Twenty-two US plants mirror the design of Fukushima Daiichi, and many stand where they could be subject to earthquakes or tsunamis. Even without those seismic events, some US plants are still at risk of Fukushima-like catastrophic flooding. Prior to the start of the current Japanese crisis, the Nuclear Regulatory Commission learned that the Oconee Nuclear Plant in Seneca, South Carolina, was at risk of a major flood from a dam failure upstream. In the event of a dam breach–an event the NRC deems more likely than the odds that were given for the 2011 tsunami–the flood at Oconee would trigger failures at all three reactors. Beyond hiding its own report, the NRC has taken no action–not before Fukushima, not since.

The missing link

But it was the health consequences of nuclear power–both from high-profile disasters, as well as what is considered normal operation–that dominated the two days of presentations at the New York Academy of Medicine. Here, too, researchers and scientists attempted to pose questions that governments, the nuclear industry and its captured regulators prefer to ignore, or, perhaps more to the point, omit.

Dr. Hisako Sakiyama, a member of the Fukushima Nuclear Accident Independent Investigation Commission, has been studying the effects of low-dose radiation. Like others at the symposium, Dr. Sakiyama documented the linear, no-threshold risk model drawn from data across many nuclear incidents. In essence, there is no point at which it can be said, “Below this amount of radiation exposure, there is no risk.” And the greater the exposure, the greater the risk of health problems, be they cancers or non-cancer diseases.

Dr. Sakiyama contrasted this with the radiation exposure limits set by governments. Japan famously increased what it called acceptable exposure quite soon after the start of the Fukushima crisis, and, as global background radiation levels increase as a result of the disaster, it is feared this will ratchet up what is considered “safe” in the United States, as the US tends to discuss limits in terms of exposure beyond annual average background radiation. Both approaches lack credibility and expose an ugly truth. “Debate on low-dose radiation risk is not scientific,” explained Sakiyama, “but political.”

And the politics are posing health and security risks in Japan and the US.

Akio Matsumura, who spoke at the Fukushima conference in his role as founder of the Global Forum of Spiritual and Parliamentary Leaders for Human Survival, described a situation at the crippled Japanese nuclear plant that is much more perilous, even today, than leaders are willing to acknowledge. Beyond the precarious state of the spent fuel pool above reactor four, Matsumura also cited the continued melt-throughs of reactor cores (which could lead to a steam explosion), the high levels of radiation at reactors one and three (making any repairs impossible), and the unprotected pipes retrofitted to help cool reactors and spent fuel. “Probability of another disaster,” Matsumura warned, “is higher than you think.”

Matsumura lamented that investigations of both the technical failures and the health effects of the disaster are not well organized. “There is no longer a link between scientists and politicians,” said Matsumura, adding, “This link is essential.”

The Union of Concerned Scientists’ Lochbaum took it further. “We are losing the no-brainers with the NRC,” he said, implying that what should be accepted as basic regulatory responsibility is now subject to political debate. With government agencies staffed by industry insiders, “the deck is stacked against citizens.”

Both Lochbaum and Arnie Gundersen criticized the nuclear industry’s lack of compliance, even with pre-Fukushima safety requirements. And the industry’s resistance undermines nuclear’s claims of being competitive on price. “If you made nuclear power plants meet existing law,” said Gundersen, “they would have to shut because of cost.”

But without stronger safety rules and stricter enforcement, the cost is borne by people instead.

Determinate data, indeterminate risk

While the two-day symposium was filled with detailed discussions of chemical and epidemiologic data collected throughout the nuclear age–from Hiroshima through Fukushima–a cry for more and better information was a recurring theme. In a sort of wily corollary to “garbage in, garbage out,” experts bemoaned what seem like deliberate holes in the research.

Even the long-term tracking study of those exposed to the radiation and fallout in Japan after the atomic blasts at Hiroshima and Nagasaki–considered by many the gold-standard in radiation exposure research because of the large sample size and the long period of time over which data was collected–raises as many questions as it answers.

The Hiroshima-Nagasaki data was referenced heavily by Dr. David Brenner of the Center for Radiological Research, Columbia University College of Physicians and Surgeons. Dr. Brenner praised the study while using it to buttress his opinion that, while harm from any nuclear event is unfortunate, the Fukushima crisis will result in relatively few excess cancer deaths–something like 500 in Japan, and an extra 2,000 worldwide.

“There is an imbalance of individual risk versus overall anxiety,” said Brenner.

But Dr. Wing, the epidemiologist from the UNC School of Public Health, questioned the reliance on the atom bomb research, and the relatively rosy conclusions those like Dr. Brenner draw from it.

“The Hiroshima and Nagasaki study didn’t begin till five years after the bombs were dropped,” cautioned Wing. “Many people died before research even started.” The examination of cancer incidence in the survey, Wing continued, didn’t begin until 1958–it misses the first 13 years of data. Research on “Black Rain” survivors (those who lived through the heavy fallout after the Hiroshima and Nagasaki bombings) excludes important populations from the exposed group, despite those populations’ high excess mortality, thus driving down reported cancer rates for those counted.

The paucity of data is even more striking in the aftermath of the Three Mile Island accident, and examinations of populations around American nuclear power plants that haven’t experienced high-profile emergencies are even scarcer. “Studies like those done in Europe have never been done in the US,” said Wing with noticeable regret. Wing observed that a German study has shown increased incidences of childhood leukemia near operating nuclear plants.

There is relatively more data on populations exposed to radioactive contamination in the wake of the Chernobyl nuclear accident. Yet, even in this catastrophic case, the fact that the data has been collected and studied owes much to the persistence of Alexey Yablokov of the Russian Academy of Sciences. Yablokov has been examining Chernobyl outcomes since the early days of the crisis. His landmark collection of medical records and the scientific literature, Chernobyl: Consequences of the Catastrophe for People and the Environment, has its critics, who fault its strong warnings about the long-term dangers of radiation exposure, but it is that strident tone that Yablokov himself said was crucial to the evolution of global thinking about nuclear accidents.

Because of pressure from the scientific community and, as Yablokov stressed at the New York conference, pressure from the general public, as well, reaction to accidents since Chernobyl has evolved from “no immediate risk,” to small numbers who are endangered, to what is now called “indeterminate risk.”

Calling risk “indeterminate,” believe it or not, actually represents a victory for science, because it means more questions are asked–and asking more questions can lead to more and better answers.

Yablokov made it clear that it is difficult to estimate the real individual radiation dose–too much data is not collected early in a disaster, fallout patterns are patchy and different groups are exposed to different combinations of particles–but he drew strength from the volumes and variety of data he’s examined.

Indeed, as fellow conference participant, radiation biologist Ian Fairlie, observed, people can criticize Yablokov’s advocacy, but the data is the data, and in the Chernobyl book, there is lots of data.

Complex and consequential

Data presented at the Fukushima symposium also included much on what might have been–and continues to be–released by the failing nuclear plant in Japan, and how that contamination is already affecting populations on both sides of the Pacific.

Several of those present emphasized the need to better track releases of noble gases, such as xenon-133, from the earliest days of a nuclear accident–both because of the dangers these elements pose to the public and because gas releases can provide clues to what is unfolding inside a damaged reactor. But more is known about the high levels of radioactive iodine and cesium contamination that have resulted from the Fukushima crisis.

In the US, since the beginning of the disaster, five west coast states have measured elevated levels of iodine-131 in air, water and kelp samples, with the highest airborne concentrations detected from mid-March through the end of April 2011. Iodine concentrates in the thyroid, and, as noted by Joseph Mangano, director of the Radiation and Public Health Project, fetal thyroids are especially sensitive. In the 15 weeks after fallout from Fukushima crossed the Pacific, the western states reported a 28-percent increase in newborn (congenital) hypothyroidism (underactive thyroid), according to the Open Journal of Pediatrics. Mangano contrasted this with a three-percent drop in the rest of the country during the same period.

The most recent data from Fukushima prefecture shows over 44 percent of children examined there have thyroid abnormalities.

Of course, I-131 has a relatively short half-life; radioactive isotopes of cesium will have to be tracked much longer.

With four reactors and densely packed spent fuel pools involved, Fukushima Daiichi’s “inventory” (as it is called) of cesium-137 dwarfed Chernobyl’s at the time of its catastrophe. Consequently, and contrary to some of the spin out there, the Cs-137 emanating from the Fukushima plant is also out-pacing what happened in Ukraine.

Estimates put the release of Cs-137 in the first months of the Fukushima crisis at between 64 and 114 petabecquerels (this number includes the first week of aerosol release and the first four months of ocean contamination). And the damaged Daiichi reactors continue to add 240 million becquerels of radioactive cesium to the environment every single day. Chernobyl’s cesium-137 release is pegged at about 84 petabecquerels. (One petabecquerel equals 1,000,000,000,000,000 becquerels.) By way of comparison, the nuclear “device” dropped on Hiroshima released 89 terabecquerels (1,000 terabecquerels equal one petabecquerel) of Cs-137, or, to put it another way, even at the low end of the estimates, Fukushima has already released more than 700 times as much radioactive cesium as the Hiroshima bomb.
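For readers who want to check the unit conversions, here is a minimal arithmetic sketch in Python. It uses only the release figures and conversion factors quoted in the paragraph above. The variable and function names are illustrative and do not come from any study, and the result is only as good as the quoted estimates.

```python
# Conversion factors as stated in the text.
BQ_PER_TBQ = 10**12   # one terabecquerel in becquerels
BQ_PER_PBQ = 10**15   # one petabecquerel in becquerels (1,000 TBq)

# Cs-137 release figures quoted in the article, converted to becquerels.
fukushima_low_bq = 64 * BQ_PER_PBQ
fukushima_high_bq = 114 * BQ_PER_PBQ
chernobyl_bq = 84 * BQ_PER_PBQ
hiroshima_bq = 89 * BQ_PER_TBQ
ongoing_daily_bq = 240_000_000  # continuing daily release cited for Daiichi


def times_hiroshima(release_bq: int) -> float:
    """Express a release as a multiple of the quoted Hiroshima figure."""
    return release_bq / hiroshima_bq


if __name__ == "__main__":
    for label, release in [
        ("Fukushima (low estimate)", fukushima_low_bq),
        ("Fukushima (high estimate)", fukushima_high_bq),
        ("Chernobyl", chernobyl_bq),
    ]:
        print(f"{label}: {times_hiroshima(release):,.0f} x Hiroshima")

    # Scale of the ongoing release: becquerels added per year at the daily rate.
    print(f"Ongoing release per year: {ongoing_daily_bq * 365:,} Bq")
```

Working in whole becquerels keeps the petabecquerel and terabecquerel figures on a single scale, which is where comparisons like these most often go wrong.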

The effects of elevated levels of radioactive cesium are documented in several studies across post-Chernobyl Europe, but while the implications for public health are significant, they are also hard to contain in a sound bite. As medical genetics expert Wladimir Wertelecki explained during the conference, a number of cancers and other serious diseases emerged over the first decade after Chernobyl, but the cycles of farming, consuming, burning and then fertilizing with contaminated organic matter will produce illness and genetic abnormalities for many decades to come. Epidemiological studies are only descriptive, Wertelecki noted, but they can serve as a “foundation for cause and effect.” The issues ahead for all of those hoping to understand the Fukushima disaster and the repercussions of the continued use of nuclear power are, as Wertelecki pointed out, “Where you study and what you ask.”

One of the places that will need some of the most intensive study is the Pacific Ocean. Because Japan is an island, most of Fukushima’s fallout plume drifted out to sea. Perhaps more critically, millions of gallons of water have been pumped into and over the damaged reactors and spent fuel pools at Daiichi, and because of still-unplugged leaks, some of that water flows into the ocean every day. (And even if those leaks are plugged and the nuclear fuel is stabilized someday, mountain runoff from the area will continue to discharge radionuclides into the water.) Fukushima’s fisheries are closed and will remain so as far into the future as anyone can anticipate. Bottom feeders and freshwater fish exhibit the worst levels of cesium, but they are only part of the picture. Ken Buesseler, a marine scientist at Woods Hole Oceanographic Institution, described a complex ecosystem of ocean currents, food chains and migratory fish, some of which carry contamination with them, some of which actually work cesium out of their flesh over time. The seabed and some beaches will see increases in radio-contamination. “You can’t keep just measuring fish,” warned Buesseler, implying that the entire Pacific Rim has involuntarily joined a multidimensional long-term radiation study.

For what it’s worth

Did anyone die as a result of the nuclear disaster that started at Fukushima Daiichi two years ago? Dr. Sakiyama, the Japanese investigator, told those assembled at the New York symposium that 60 patients died while being moved from hospitals inside the radiation evacuation zone–does that count? Joseph Mangano has reported on increases in infant deaths in the US following the arrival of Fukushima fallout–does that count? Will cancer deaths or future genetic abnormalities, be they at the low or high end of the estimates, count against this crisis?

It is hard to judge these answers when the question is so very flawed.

As discussed by many of the participants throughout the Fukushima conference, a country’s energy decisions are rooted in politics. Nuclear advocates would have you believe that their favorite fuel should be evaluated inside an extremely limited universe, that there is some level of nuclear-influenced harm that can be deemed “acceptable,” that questions stem from the necessity of atomic energy instead of from whether civilian nuclear power is necessary at all.

The nuclear industry would have you do a cost-benefit analysis, but they’d get to choose which costs and benefits you analyze.

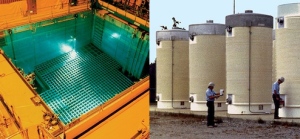

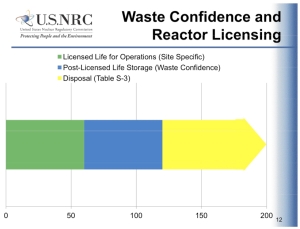

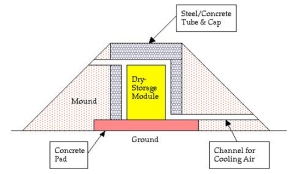

While all this time has been and will continue to be spent on tracking the health and environmental effects of nuclear power, it isn’t a fraction of a fraction of the time that the world will be saddled with fission’s dangerous high-level radioactive trash (a problem without a real temporary storage program, forget a permanent disposal solution). And for all the money that has been and will continue to be spent compiling the health and environmental data, it is a mere pittance when compared with the government subsidies, liability waivers and loan guarantees lavished upon the owners and operators of nuclear plants.

Many individual details will continue to emerge, but a basic fact is already clear: nuclear power is not the world’s only energy option. Nor are the choices limited to just fossil and fissile fuels. Nuclear lobbyists would love to frame the debate–as would advocates for natural gas, oil or coal–as cold calculations made with old math. But that is not where the debate really resides.

If nuclear reactors were the only way to generate electricity, would 500 excess cancer deaths be acceptable? How about 5,000? How about 50,000? If nuclear’s projected mortality rate comes in under coal’s, does that make the deaths–or the high energy bills, for that matter–more palatable?

As the onetime head of the Tennessee Valley Authority, David Freeman, pointed out toward the end of the symposium, every investment in a new nuclear, gas or coal plant is a fresh 40-, 50-, or 60-year commitment to a dirty, dangerous and outdated technology. Every favor the government grants to nuclear power triggers an intense lobbying effort on behalf of coal or gas, asking for equal treatment. Money spent bailing out the past could be spent building a safer and more sustainable future.

Nuclear power does not exist in a vacuum, and neither do its effects. There is much more to be learned about the medical and ecological consequences of the Fukushima nuclear disaster–but that knowledge should be used to minimize and mitigate the harm. These studies do not ask and are not meant to answer, “Is nuclear worth it?” When the world already has multiple alternatives–not just in renewable technologies, but also in conservation strategies and improvements in energy efficiency–the answer is already “No.”

A version of this story previously appeared on Truthout; no version may be reprinted without permission.
